# The Password Game Rule 18 solutions

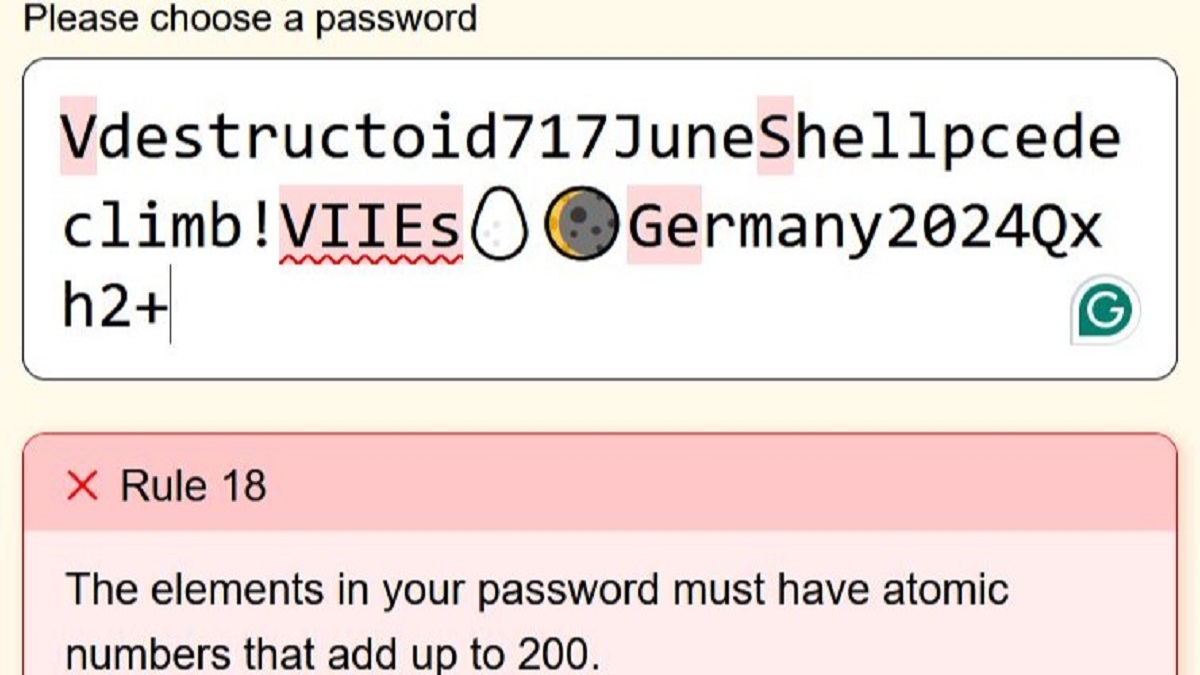

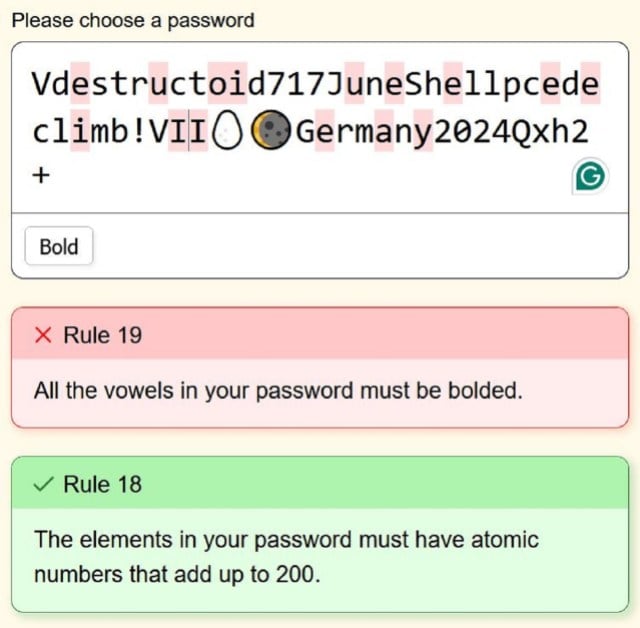

The Password Game is painful. It requires so many mental gymnastics that it could actually break your brain. If you’ve made it to Rule 18, congratulations, I suppose. Here, you’ll need to find some atomic numbers that add up to 200.

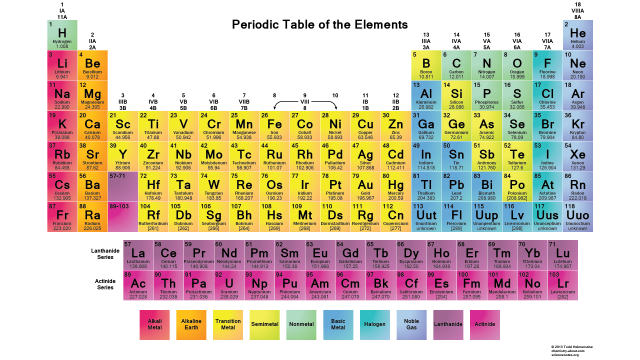

What are atomic numbers? Are you familiar with the Periodic Table of Elements? It hangs on the wall in basically every science or chemistry classroom. It shows each of the chemical elements, with each one assigned a number based on the number of protons found in the atom’s nucleus. That atomic number is what we’re after here. Here’s the periodic table. Let’s take a look.

So, when you create a password, you’re invariably going to trip over some chemical elements. It would be so easy right now if you could just plug in EsMd (Einsteinium + Mendelevium, 99 + 101 = 200), but it’s almost guaranteed not to shake out that way. In fact, Rule 12 has you use an element symbol, so you definitely have one in there. I had actually chosen Es for Rule 12, but by the time I got here, a lot more elements had built up in my password.

## What are the best atomic numbers for Rule 18 in The Password Game?

To echo my colleague who wrote the guide on Rule 16, unfortunately, this means that I can’t just give you a few elements to jam together to make 200. It really depends on what you already have in your password.

In fact, I had Vanadium+Vanadium+Iodine+Iodine+Sulfur+Germanium+Einsteinium (23+23+53+53+16+32+99=299). Luckily, the change I had to make was clear: I just needed to remove Einsteinium from the mix, and everything was brought down to 200 while still satisfying the rest of my rules.
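To make that arithmetic concrete, here is a minimal Python sketch that takes the atomic numbers currently counted in a password and looks for the smallest group to drop so the rest adds up to 200. The function name `elements_to_drop` is hypothetical and only for illustration; it is not part of the game.

```python
from itertools import combinations


def elements_to_drop(numbers, target=200):
    """Return the smallest group of atomic numbers to remove so the rest sums to target.

    Returns an empty tuple if the sum already matches, or None if no
    removal works (for example, when the total is below the target).
    """
    excess = sum(numbers) - target
    if excess < 0:
        return None  # under the target: elements must be added, not removed
    # Try removing 0 elements, then 1, then 2, ... so the smallest fix wins.
    for size in range(len(numbers) + 1):
        for combo in combinations(numbers, size):
            if sum(combo) == excess:
                return combo
    return None


# V, V, I, I, S, Ge, Es from the example above
counted = [23, 23, 53, 53, 16, 32, 99]
print(sum(counted))               # 299
print(elements_to_drop(counted))  # (99,) i.e. remove Einsteinium
```

Dropping two Vanadiums and one Iodine (23+23+53=99) would also work on paper, but that would break the Roman numerals, which is why the single-element fix is the one to reach for.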

The problem here is that The Password Game will match any single uppercase letter that is an element symbol, as well as any uppercase-then-lowercase pair that matches a chemical element on the table. It will highlight the ones that are counted, but even then, it can be unclear. So, in my example, when I used V and I to make Roman numerals for Rule 7 and Rule 9, I inadvertently added a Vanadium every time I wanted a 5, and an Iodine every time I wanted a 1.
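The matching behavior described above can be approximated with a short Python sketch. This is an assumption about how the game scans a password, not its actual code: it reads left to right, prefers a two-letter symbol (uppercase followed by lowercase), and otherwise falls back to a one-letter symbol. The names `find_elements` and `atomic_sum` are hypothetical.

```python
# Element symbols in atomic-number order (H = 1 ... Og = 118).
SYMBOLS = """
H He Li Be B C N O F Ne
Na Mg Al Si P S Cl Ar K Ca
Sc Ti V Cr Mn Fe Co Ni Cu Zn
Ga Ge As Se Br Kr Rb Sr Y Zr
Nb Mo Tc Ru Rh Pd Ag Cd In Sn
Sb Te I Xe Cs Ba La Ce Pr Nd
Pm Sm Eu Gd Tb Dy Ho Er Tm Yb
Lu Hf Ta W Re Os Ir Pt Au Hg
Tl Pb Bi Po At Rn Fr Ra Ac Th
Pa U Np Pu Am Cm Bk Cf Es Fm
Md No Lr Rf Db Sg Bh Hs Mt Ds
Rg Cn Nh Fl Mc Lv Ts Og
""".split()

ATOMIC_NUMBER = {symbol: i for i, symbol in enumerate(SYMBOLS, start=1)}


def find_elements(password):
    """Return (symbol, atomic number) pairs found by a greedy left-to-right scan.

    Assumed rule: a two-letter symbol (uppercase + lowercase) wins over a
    one-letter symbol starting at the same position.
    """
    found = []
    i = 0
    while i < len(password):
        pair = password[i:i + 2]
        single = password[i]
        if len(pair) == 2 and pair[1].islower() and pair in ATOMIC_NUMBER:
            found.append((pair, ATOMIC_NUMBER[pair]))
            i += 2
        elif single in ATOMIC_NUMBER:
            found.append((single, ATOMIC_NUMBER[single]))
            i += 1
        else:
            i += 1
    return found


def atomic_sum(password):
    """Sum the atomic numbers of every element the scan detects."""
    return sum(number for _, number in find_elements(password))


print(atomic_sum("VVIISGeEs"))  # 299: V, V, I, I, S, Ge, Es
print(atomic_sum("VVIISGees"))  # 200: lowercase "es" is no longer Einsteinium
```

Running a draft password through something like this before typing it in makes the accidental Vanadiums and Iodines easy to spot.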

If the sum of all these elements exceeds 200, you can’t subtract your way back down, so you’re going to need to change some of them. One way is to shift a letter to lowercase, where it won’t be detected as a chemical element.

Hopefully, by explaining the goal, providing a periodic table, and telling you where things can go wrong, I’ve helped you figure out how to get to 200. I’m sorry if your brain breaks in the process. I know that I’m going to reach for the ibuprofen the moment I hit submit on this article.
