# Review: Like a Dragon: Ishin! – Destructoid

The stage of history

Like a Dragon has seen its main series’ star steadily rise in the west, and now we’re getting a remake of one entry that never managed to make it over. Like a Dragon: Ishin! is a remake of the 2014 game, tackling historical fiction through the lens of its characters, humor, and action.

While the anachronistic premise can and does lead to some outlandish moments, the good news is that, for the most part, Ishin comes together well. Aside from technical hitches and some tedium in the main story, Like a Dragon: Ishin! is an enjoyable romp through history with all the bizarre slice-of-life moments and dramatic fights you could want.

Like a Dragon: Ishin! (PS4, PS5 [reviewed], Xbox One, Xbox Series X|S, PC)

Developer: Ryu Ga Gotoku Studio

Publisher: Sega

Released: February 21, 2023

MSRP: $59.99

Blade of vengeance

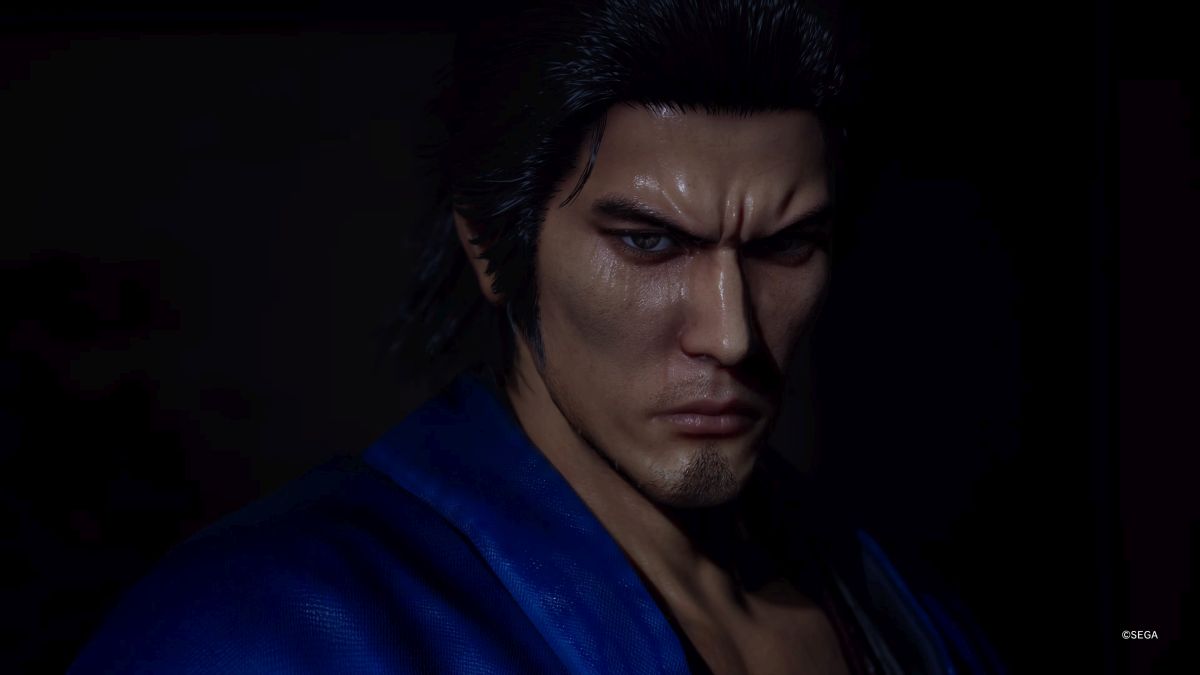

Ishin follows the story of Sakamoto Ryoma, a swordsman returning home to Tosa from his training in Edo. Ryoma is quickly reunited with adoptive father Yoshida Toyo and sworn brother Takechi Hanpeita, as the three collaborate to overthrow the class system of the land. A masked assassin interrupts the proceedings, however. Framed for murder, Ryoma flees Tosa and heads to Kyo under the guise of Saito Hajime, searching for the attacker and their unique fighting style.

For those who aren’t keenly familiar with the history of Japan, Ishin does take place in and portray the events surrounding the end of the Edo period. But it does so by clearly dramatizing events, intertwining and supposing characters in the ways historical fiction does, and using Like a Dragon (née Yakuza) characters as stand-ins for the figures of the era. It’s the Muppet Christmas Carol take on history, but instead of Kermit the Frog playing Bob Cratchit, it’s Kazuma Kiryu’s likeness portraying Sakamoto Ryoma / Saito Hajime.

This means that, much like The Muppets, every character pulls double duty. Okita Soji is their own character, but is also a representation of Majima Goro, Like a Dragon’s mad dog whose penchant for hunting Kazuma Kiryu carries over into Ishin. Normally, these ideas would clash with each other and cause friction, but in Like a Dragon: Ishin!, the characters feel wholly their own while still providing tons of fodder for long-time series fans. Ryoma might be the best example of this, carrying on as the well-meaning protagonist constantly caught up in increasingly bizarre situations. But it all, somehow, works.

The main story itself can drag a bit, especially in the middle chapters. I was sure that, by a certain point in the game, I had worn a virtual path between the Shinsengumi barracks and Ryoma’s room at the Teradaya Inn. And while the simmering tension of Ryoma searching for his mark in the wolves’ den is exciting, the constant run-arounds these events send you on can feel tedious.

When it shifts into gear though, Ishin hits the same speeds that other Like a Dragon series entries reach. While no amazing back tattoos are bared, the weaponry of this era makes for some dramatic moments nonetheless. Seeing swords clash and the classic boss text slam onto the screen with flames erupting still hits just as hard. Overall, Ishin strikes a good balance of personal drama and political intrigue, while still finding just the right moment to let the music kick in and an absolutely chaotic brawl break out.

Stance switching

When fights break out, Ryoma has a fairly decent swathe of stances to use against enemies. Sure, there’s the Brawler stance and its fists. But you can also employ a sword or gun, or both in the Wild Dancer style, highlighting the changing tides of fighting in this era.

Each stance brings something different to the table, and they felt both varied and viable enough that I kept swapping styles throughout my playthrough. Sure, the Swordsman style felt the most narratively appropriate to master and level up first; but Brawler gave me some really fun tools, and the gun-wielding stance was a go-to for dealing with the random encounters as I ran around the world. Swords may have been my staple, but I enjoyed spending time mastering the different styles and learning new moves at the dojos located around Kyo.

Boss fights are where the combat clicks the best, as the action zooms in and focuses on the tense clash between Ryoma and his opponent. I was less enthused about some battles in enclosed areas, where the camera struggled to track the action. In some areas, the cramped hallways and rooms became their own enemy, as I managed the camera alongside a swarm of enemies. And though the Heat Actions are here and effective as always, even comedic at times, I did notice some repetition in them that lessened my desire to keep hitting the Triangle button.

The real shake-up is in the Trooper Cards, which you’ll build up over the course of the story and which act as a second inventory of boons to draw from in fights. It’s an interesting system with some powerful results. Even just the starter cards Ryu Ga Gotoku Studio hands to you are solid through the game, from the quick healing and damage-boosting effects to a chain lightning move that can tear through big groups.

Building these abilities and fighters up is one of the side activities I’d like to have spent more time with, though it ultimately felt like a way to quickly out-pace the game’s difficulty curve. I never even managed to find some of the rare ones, let alone any celebrity-themed cards, but the ones I had felt like trump cards in the right situation. They weren’t insta-win effects, but I did prefer to use my own weapons to win fights. It feels more thematically appropriate, you know? But leveling and building up these Trooper Cards feels like one of Like a Dragon: Ishin’s main post-game sinks that I could fall into.

Another life

Other Like a Dragon games have had plenty of substories and side activities to get lost in, and Ishin is no different. A benefit of the walking back-and-forth Ishin demands of Ryoma is that it allows him, and the player, to find all the different side activities and bonds that can fill up this map. I don’t know if Kyo ever felt as familiar as Kamurocho, but it certainly has a similar degree of life and vigor to it.

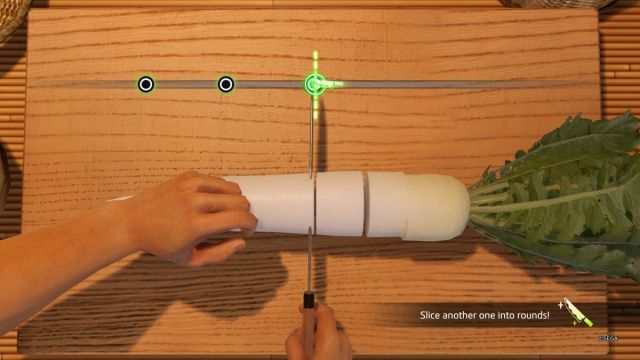

Between story missions, I’d chop wood or wait in line for notorious inari sushi. I’d tally up the prize tickets I could and turn them in, hoping for a good roll at the booth. During my time in Like a Dragon: Ishin!, I helped a courier keep his business alive, taught a man to fish, slashed cannonballs with my sword, and gave a madam a cucumber. These are exactly the kind of bizarre but oddly heartwarming substories you’d be looking for here.

The biggest of the side activities is easily the Another Life segment, which sees Ryoma building and maintaining a rural homestead with the orphaned Haruka. This mostly serves as a time and money sink, with some good results if you’re looking to have some decent health recovery options in the late game. But I did enjoy the peace and quiet of Another Life, and building this home up with my steadily accruing Virtue felt rewarding. It’s no Stardew replacement, but it’s a nice change of pace, and certainly one I’m more likely to return to over other minigames (looking at you, Brothel drinking challenge).

True to life

One area in which Like a Dragon: Ishin! suffered in my experience, though, was the technical department. Playing on PlayStation 5, I was surprised to find some heavy performance dips when using certain abilities or fighting amid certain foggy effects. (Those who may be familiar with a certain fistfight in a bathhouse will know the areas I’m talking about.)

Some of these issues were resolved in my pre-release playthrough, while others weren’t. We’re keeping an eye on it and I’ll make a note here of any substantial changes, but even with some of the patches that rolled out, I still ran into some weird technical hiccups. Nothing was game-breaking overall, but some were certainly jarring enough to note.

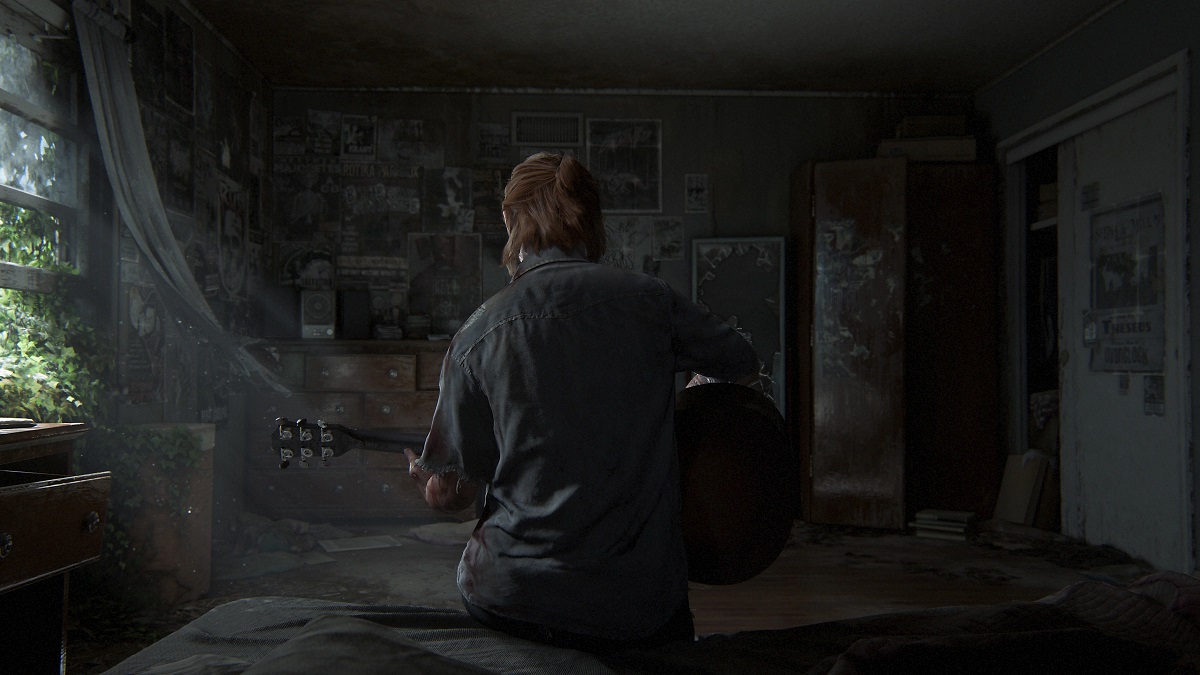

Aside from those issues, I think Like a Dragon: Ishin! excels in the big moments. It’s a historical drama that, much like Okita / Majima, loves when it gets to let loose and go crazy. Some of the later scenarios see some absolutely wild interpretations of both characters and events, and you can tell the RGG team had some fun imagining how these characters would interact in their new roles.

And, much like the Muppet-driven movie I keep referencing, Ishin knows how to balance the comedic and dramatic in equal measure. I don’t know that the running love plot worked too well for me, but most aspects of the story kept me driving towards the truth alongside Ryoma. Big moments can hit pretty hard, and little jokes and even just phrasing from the excellent localization kept me chuckling and slamming the Share button.

A different breed of dragon

The Like a Dragon series is going through some shake-ups, as it not only shifts away from the Yakuza theming but adds a new protagonist to the mix, too. In a way, Ishin feels like a celebration of the series up to this point, a recognition that these characters are memorable enough to make up a cast of characters in this pseudo-stage play of history. Adding even more faces from Yakuza games in the series, especially the popular Yakuza 0 and Yakuza: Like a Dragon, as part of the remake emphasizes this even more.

And while there are some hitches, the Ishin remake can look absolutely splendid at times too. Cutscenes especially had me surprised, as little details like the rain falling on characters or a tear running down one’s cheek floored me. Overall, this was a title well-deserving of a remake, and it got a solid one.

If you’re a newcomer, you’ll probably appreciate just the zany mix of comedy and action that defines the series and is still present here. Long-time fans will still find the things they like about this series in Ishin, too. While there are some setbacks, it’s fair to say Like a Dragon: Ishin! is a good entry in the series, hitting the same highs you’d hope for and filled with just the right balance of heart and laughter that made the series stand out in the first place. Ryoma’s story has finally come west, and it’s well worth experiencing if you’re a fan of Ryu Ga Gotoku’s brawlers.