# Shangri-La Frontier impressions: A fun VR MMO story – Destructoid

A gorgeous world

Shangri-La Frontier is by C2C Studio, a Japanese animation studio that has been around since 2006. C2C’s popular recent works include Reincarnated as a Sword and Otaku Elf. I have to admit, I only recently got back into anime and have been extremely picky, so I haven’t seen either of those. But after watching the trailers for both, I’m even more impressed with the visuals in Shangri-La Frontier. That’s not to say the other two titles look bad by any means, but SLF is definitely their best work yet in terms of visual quality.

The premise of Shangri-La Frontier is simple enough. The main character, Rakuro Hizutome, is an odd one, preferring to play scuffed games that are considered trash by others. He finds enjoyment in learning how to navigate and appreciate a title’s bugs, as opposed to getting upset by them. But after a recent playthrough of Faeria Chronicle Online, Rakuro heads to the game store to check out something new. A poster for Shangri-La Frontier, a massively popular VRMMO, catches his eye. He feels it’s time to try what some would consider a god-tier game, as opposed to a trash one.

It’s fun watching an anime that clearly cares about gaming. While Rakuro is in the local game shop, a “Comic Frontiers” poster hangs in the back with the same font and art style as Sonic Frontiers. It’s not relevant to the story, but still fun to see.

Focusing on the details is what makes SLF fun

While creating his character, Rakuro settles on Twinblade Mercenary as his class and chooses Wanderer as his Origin story. His preference for acquiring the best weapons and ignoring armor results in him selling all his starter armor. However, he doesn’t want to be running around naked if everyone can see his face, so he also grabs a blue bird mask to hide it. And so his character, Sunraku, is born. Rakuro logs into Shangri-La Frontier for the first time, and his journey begins.

It’s here that the worldbuilding and focus on game mechanics really start to kick in. Rakuro starts grinding on Goblins found in the forest he spawns in, keeping track of their spawn rate as well as the experience gains and item drop rates along the way.

He also encounters a rare spawn that gives noticeably more experience. More importantly, the rare spawn has a cool-looking weapon that surely deals more damage than his starting weapons. Unfortunately, the rare spawn doesn’t drop anything on death, but he’s convinced the weapon is on its drop table. Hours later, Rakuro is dual-wielding the weapon, over-leveled, and still in the beginner area. He’s calculated the drop rate at this point but feels it’s time to move on. Better experience gains are out there.

I’ve been here before. I know that feeling. It’s an accomplishment that’s not so meaningful in the grand scheme of things; after all, I’m sure he’ll quickly replace the weapons. But in the moment, it feels so rewarding. Maybe that’s what’s so intriguing to me about SLF. It doesn’t beeline through everything but rather takes its time and shows you all the details.

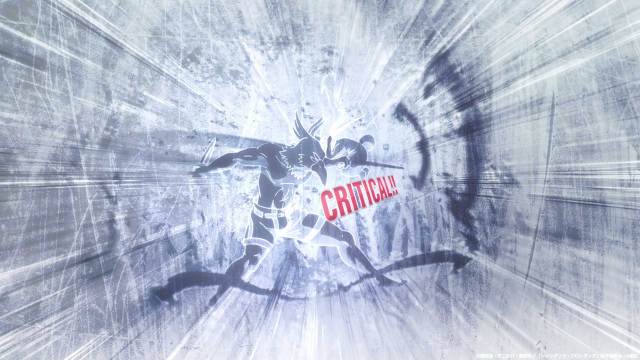

Another glimpse of where VR MMOs could someday be

The episode ends with Rakuro extremely excited to take on a boss that is a higher level than him, one the game recommends a party to take down. For the first time in a while, he can utilize his skillset as a gamer to take on a tougher enemy without the fear of silly bugs getting in the way. For a moment we see a glimpse of Rakuro lying in his bed, completely still, VR headset on. But with a smile on his face. I have to admit, I’m envious of Rakuro at this moment. I can’t wait until we can explore truly immersive VRMMOs like this.

If you’re a fan of Sword Art Online and Log Horizon, or even just an MMO fan, I recommend checking out Shangri-La Frontier. Especially if you care more about the details, mechanics, and even numbers when it comes to that kind of experience. I can tell SLF plans to tell its story while building the world around it, which is a refreshing take on the genre. I’m not a huge anime guy, but when a show properly mixes gaming into it, I’m all for it. And SLF does this well.

Shangri-La Frontier is available now on Crunchyroll and will continue to be simulcast on a weekly basis.