# AMD unveils (very) limited-edition Starfield-themed hardware


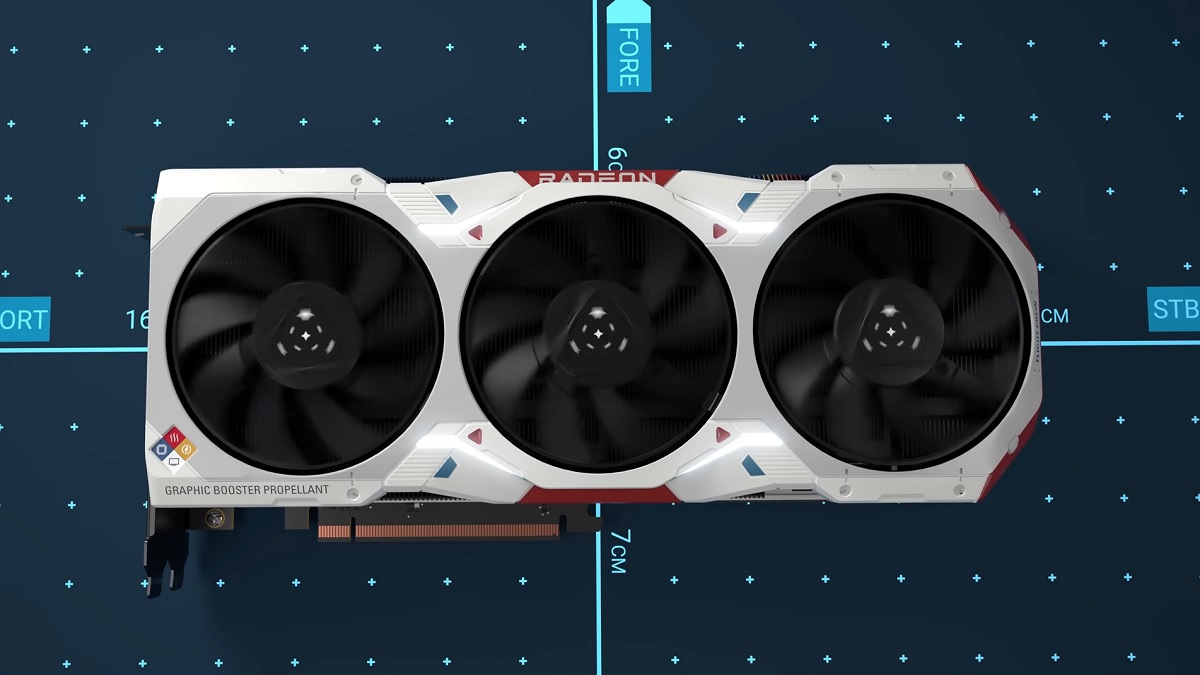

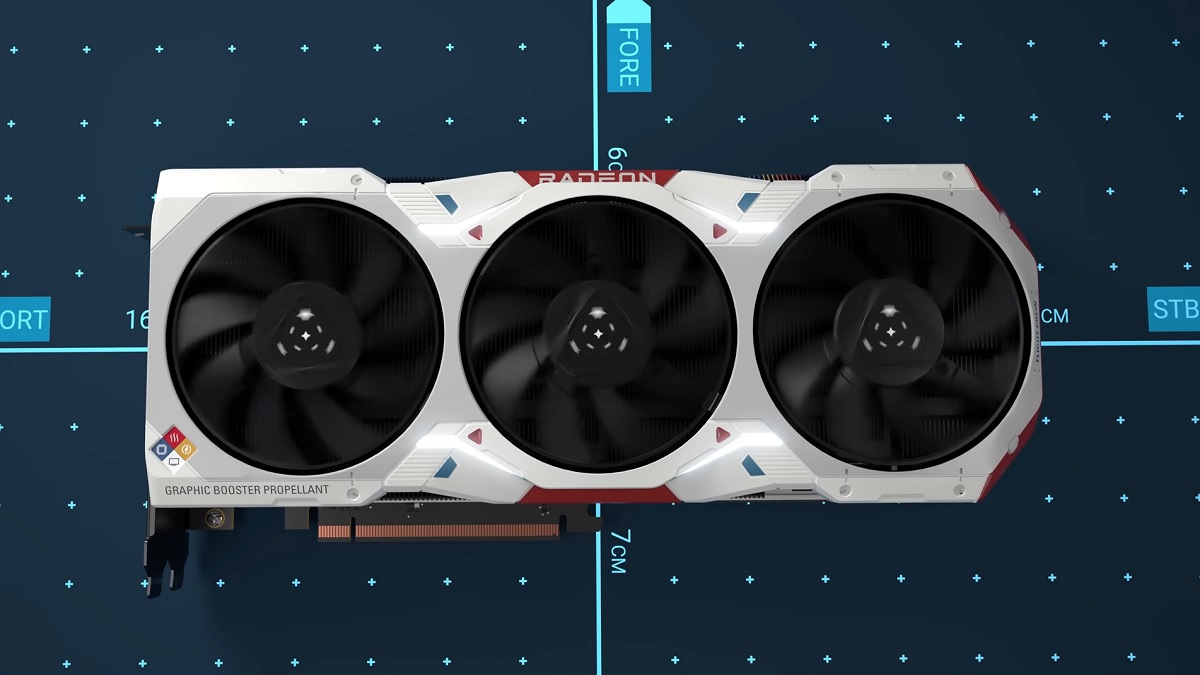

A recent video on the AMD YouTube channel introduces a limited-edition graphics card and processor themed after the upcoming space-faring RPG. More specifically, it's the Radeon RX 7900 XTX GPU and the Ryzen 7 7800X3D CPU that have been given the special Starfield makeover.

However, only 500 of these are being made available. You can check out a few more details on AMD's website. To make things even trickier, it looks as if only those who attended QuakeCon 2023 will be in with a chance of getting hold of the hardware. It doesn't look like they'll be going on sale.

So what's the point?

This looks like yet another way that AMD and Bethesda are cozying up together. A few weeks back, the tech giant announced that it would be giving away a free copy of Starfield with purchases of select graphics cards or CPUs.

The game is also being optimized for Team Red's products, thanks to a partnership between Bethesda and AMD. As such, some Nvidia or Intel users might be feeling a little left out.

With only a few weeks to go until launch day, Starfield keeps its first-class seat on the Hype Train. There's a lot riding on this new IP from the Skyrim and Fallout studio. We can't say for certain how successful the game will be when it comes out. Ah, who are we kidding? It's going to be huge!