# Starfield’s Sarah Morgan will be your Skyrim companion with this mod

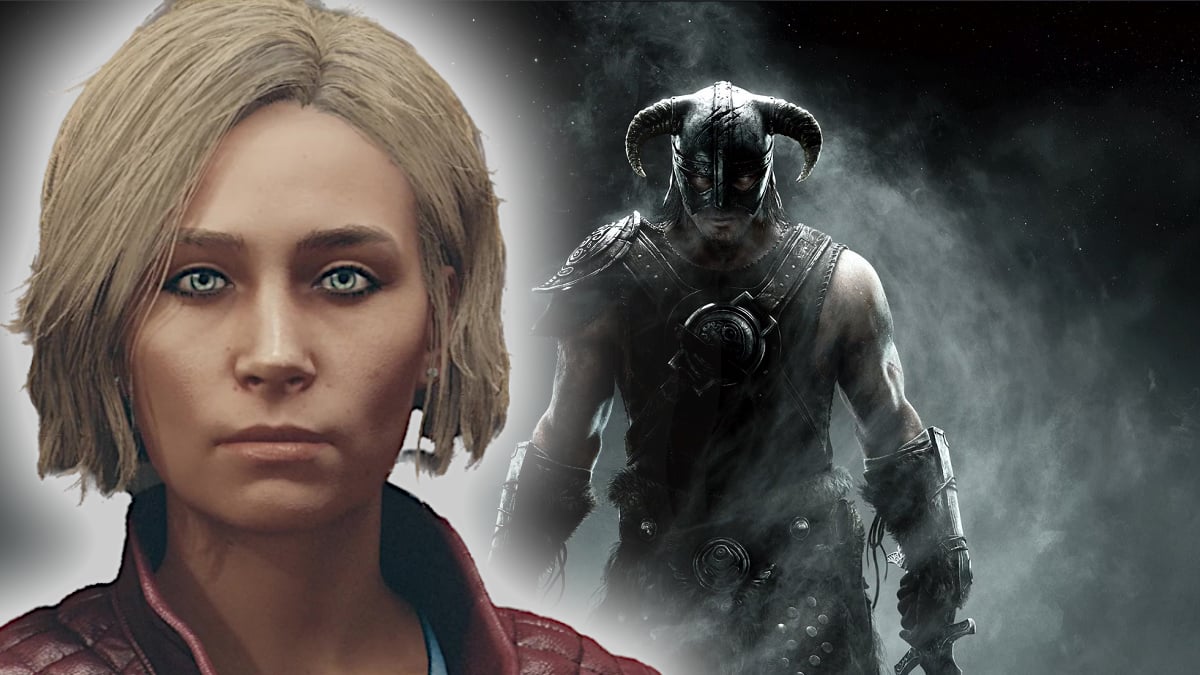

If you head over to Nexus Mods, you’ll find that user ndreams has just released a mod for Skyrim that lets you become companions with Starfield’s own Sarah Morgan, aka the Chair of Constellation.

It looks like a fairly simple change to The Elder Scrolls 5, adding Morgan in as a “vanilla follower” who can be seen wearing a standard miner outfit. Sadly, the description doesn’t say whether she retains any of her dialogue, so we may have to assume that she doesn’t.

Where can I find her?

As with any follower, in Starfield or Skyrim, you’ll need to seek Sarah Morgan out before you can have her in your employ. The mod description page says she can be found wandering the streets of Whiterun during the day, or by the Temple of Kynareth at night.

You might need to do some tinkering before you can get her as a follower. For example, ndreams recommends some different textures. Other than that, it should be a fairly straightforward install.

Although Starfield came out only a few weeks ago, we’ve already seen a slew of custom mods for the game, and more are coming out all the time. Indeed, there’s even been some crossover, such as this one, which is aiming to turn the Bethesda RPG into Star Wars.