# MW3 Season 3 continues to neglect Warzone Battle Royale

As with every new season of Call of Duty: Warzone, Season 3 is adding a ton of new content. Players can look forward to new weapons, the return of Rebirth Island, and much more. However, one notable part has been neglected for quite a while: standard Battle Royale. Players have started to complain, and it’s getting a bit frustrating to see so little love given to the BR game mode and the Urzikstan map.

## All new Season 3 Warzone content is for Resurgence

The developers finally gave us a glimpse of what’s coming with the big Season 3 content update on April 3. Modern Warfare 3 multiplayer players are ecstatic, as there’s a lot to love about the new content and changes. Those who mainly play Resurgence game modes in Warzone should be happy too. However, the big-map game mode, Battle Royale, remains the same.

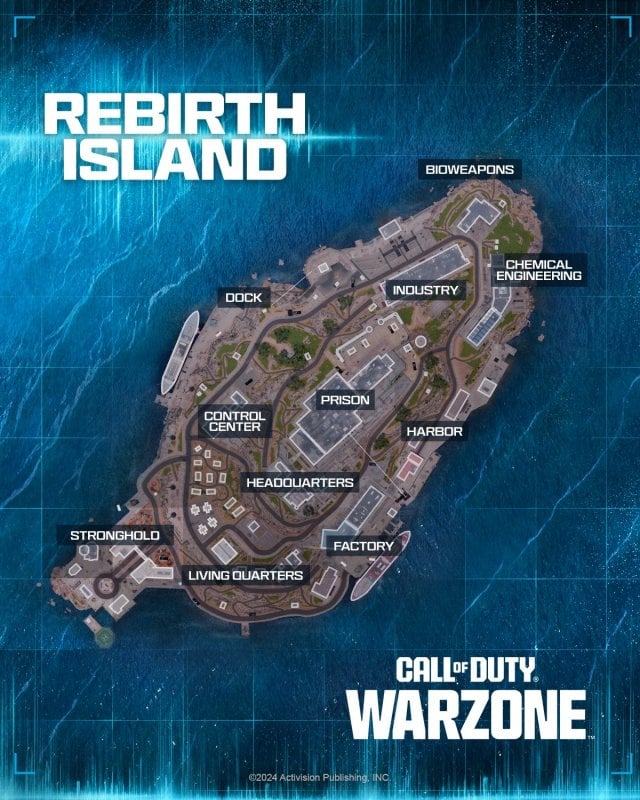

- Rebirth Island, a fan-favorite Resurgence map, is making its return. Some changes to the map will keep it fresh while offering the same fast-paced gameplay players grew to love in earlier iterations of the game.

- New modes and gameplay features include variants for Resurgence, and for Rebirth Island specifically. The game mode will now have Biometric Scanners, Smart Displays, and Weapon Trade Stations around the map. There will also be variable conditions at different times of day, a new Champion’s Quest, and ‘infil strikes.’ These strikes are meant to shake up the gameplay, as they can do things like knock over the lighthouse and crash it into the prison, creating all-new ways to fight and move around the Points of Interest (POIs).

- Warzone Ranked Play: Ranked Play continues in Season 3, only now it’s moving from the Fortune’s Keep Resurgence map to Rebirth Island.

There are also several other changes and updates, almost all of them made for Resurgence game modes. Unfortunately, it looks like players like me who love big-map Battle Royale on Urzikstan have been forgotten.

## Is anything new coming to Urzikstan?

In the long list of updates coming with Modern Warfare 3 and Warzone Season 3, only a select few apply to Urzikstan. The first is a new game mode called Boot Camp, which is exactly what it sounds like. It’s a Warzone training ground for new players, where they can drop in and fight against bots in the regular Battle Royale game mode. In this quads-only mode, 20 players and 24 bots face off in a noob-friendly version of the Urzikstan game mode. Player, weapon, and battle pass XP is heavily limited in this mode so experienced players can’t farm it for fast leveling.

Next, Urzikstan and Vondel are both getting a new event added to their gulag system. Fighting to the death is no longer the only option, as both players can now agree to leave together. When two ladders drop from the roof, both players can climb out to safety, or one can lie to the other and kill them before they escape. A similar mechanic existed in the gulag of earlier Warzone versions; it was removed and is now being added back.

Finally, some bunkers have now opened on Urzikstan, as they did on Verdansk and Caldera in the past. What’s inside is still unknown, but the devs warn of imminent threats lurking in the area. We’d bet there are valuable rewards inside if you’re able to get past whatever is in the way. As with every BR map so far, Urzikstan needed its bunkers to open so players could grab loot and hide in them. Though it doesn’t add anything new to the gameplay or the map itself, it’s an easy way for the devs to get ‘new content’ into Urzikstan.

## Warzone Battle Royale needs the Resurgence treatment

As you can see, there’s quite a bit more significant content coming to other game modes in Season 3. Standard Battle Royale players are definitely feeling left out. The list of content available elsewhere but missing from big-map BR keeps growing with each passing season.

- Ranked mode is still only available in Resurgence. Without a big-map ranked mode in Season 3, it looks like we’re at least another few months away from seeing it again.

- One map is still the only option when playing Battle Royale game modes. Resurgence players now have another map, Rebirth Island, to enjoy alongside Vondel, Fortune’s Keep, and Ashika Island. Urzikstan isn’t bad, but would anyone complain about a rotation of it with Verdansk and Caldera? Or maybe even a brand-new map?

- A lack of POI changes, or anything like the new infil strikes happening on Rebirth Island. Although bunker access is always a fun Easter egg to discover with some cool rewards, it doesn’t change the way the map plays. Urzikstan has gone largely unchanged for a long time. It needs new or heavily altered POIs, which could likely happen the same way they do on Rebirth Island, based on time of day and other factors, though obviously on a much larger scale.

- No new or exciting equipment, like the frequently suggested Blackout grapple gun or another innovative, game-changing item. Even when new items do come out, they’re often recycled, like the portable buy stations from Caldera.

Some big-name streamers have even taken to Twitter to voice their displeasure with the lack of changes or a ranked mode in big-map Battle Royale. One even said it feels like the devs are “going all-in on Resurgence,” which is certainly hard to argue with. Has Call of Duty really forgotten about standard Battle Royale? Or is something big in the works that we’re not allowed to know about yet? We hope it’s the latter, but we’ll have to wait until at least Season 4 before anything exciting in Warzone can come to fruition.