# Meta’s Increasingly Relying on AI to Make Decisions About User Experience Elements

As highlighted by Meta CEO Mark Zuckerberg in a recent overview of the impact of AI, Meta is increasingly relying on AI-powered systems for more aspects of its internal development and management, including coding, ad targeting, risk assessment, and more.

And that could soon become an even bigger factor, with Meta reportedly planning to use AI for up to 90% of all of its risk assessments across Facebook and Instagram, including all product development and rule changes.

As reported by NPR:

“For years, when Meta launched new features for Instagram, WhatsApp and Facebook, teams of reviewers evaluated possible risks: Could it violate users’ privacy? Could it cause harm to minors? Could it worsen the spread of misleading or toxic content? Until recently, what are known inside Meta as privacy and integrity reviews were conducted almost entirely by human evaluators, but now, according to internal company documents obtained by NPR, up to 90% of all risk assessments will soon be automated.”

Which seems potentially problematic, putting a lot of trust in machines to protect users from some of the worst aspects of online interaction.

But Meta is confident that its AI systems can handle such tasks, including moderation, as it showcased in its Transparency Report for Q1, published last week.

Earlier in the year, Meta announced that it would be changing its approach to “less severe” policy violations, with a view to reducing the number of enforcement mistakes and restrictions.

In changing that approach, Meta says that when it finds that its automated systems are making too many mistakes, it’s now deactivating those systems entirely as it works to improve them, while it’s also:

“…getting rid of most [content] demotions and requiring greater confidence that the content violates for the rest. And we’re going to tune our systems to require a much higher degree of confidence before a piece of content is taken down.”

So, essentially, Meta’s refining its automated detection systems to ensure that they don’t remove posts too hastily. And Meta says that, so far, this has been a success, resulting in a 50% reduction in rule enforcement mistakes.

Which is seemingly a positive, but then again, a reduction in mistakes can also mean that more violative content is being displayed to users in its apps.

Which was also reflected in its enforcement data:

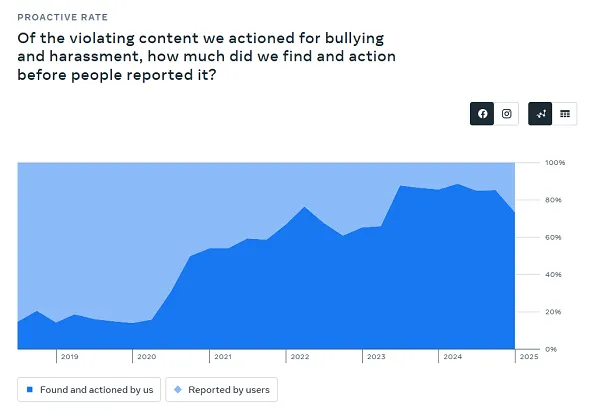

As you can see in this chart, Meta’s automated detection of bullying and harassment on Facebook declined by 12% in Q1, which means that more of that content was getting through, because of Meta’s change in approach.

Which, on a chart like this, doesn’t look like a significant impact. But in raw numbers, that’s a variance of millions of violative posts that Meta’s taking faster action on, and millions of harmful comments that are being shown to users in its apps as a result of this change.

The impact, then, could be significant, but Meta’s looking to put more reliance on AI systems to understand and enforce these rules in future, in order to maximize its efforts on this front.

Will that work? Well, we don’t know as yet, and this is just one aspect of how Meta’s looking to integrate AI to assess and action its various rules and policies, to better protect its billions of users.

As noted, Zuckerberg has also flagged that “sometime in the next 12 to 18 months,” most of Meta’s evolving code base will be written by AI.

That’s a more logical application of AI processes, in that they can replicate code by ingesting vast amounts of data, then providing assessments based on logical matches.

But when you’re talking about rules and policies, and things that could have a big impact on how users experience each app, that seems like a more risky use of AI tools.

In response to NPR, Meta said that product risk review changes will still be overseen by humans, and that only “low-risk decisions” are being automated. But even so, it’s a window into the potential future expansion of AI, where automated systems are being relied upon more and more to dictate actual human experiences.

Is that a better way forward on these elements?

Maybe it will end up being so, but it still seems like a significant risk to take, when we’re talking about such a huge scale of potential impacts, if and when they make mistakes.

Andrew Hutchinson
